Hi Sander,

I am trying to apply the method we discussed yesterday, but I’ve just discovered that the projection method is not optimal for me, as I need EPSG:4326. Is there a way to use something similar to the following GDAL command?

gdalwarp -srcnodata 9.96921e+36 -dstnodata 9999 -geoloc -t_srs EPSG:4326 -overwrite $product_file.tmp.variable_mod.vrt $output_file

Note that to be able to apply such a command using the original S5P files from the Sentinel hub, I do the following steps, which return me a correct CRS (I can load them in GeoServer):

gdal_translate -of VRT HDF5:"${product_file}":longitude $product_file.tmp.lon.vrt

gdal_translate -of VRT HDF5:"${product_file}":latitude $product_file.tmp.lat.vrt

gdal_translate -of VRT HDF5:"${product_file}":$variable $product_file.tmp.variable.vrt

head -n1 $product_file.tmp.variable.vrt > $product_file.tmp.variable_mod.vrt

echo '<Metadata domain="GEOLOCATION">

<mdi key="X_DATASET">'$product_file'.tmp.lon.vrt</mdi>

<mdi key="X_BAND">1</mdi>

<mdi key="Y_DATASET">'$product_file'.tmp.lat.vrt</mdi>

<mdi key="Y_BAND">1</mdi>

<mdi key="PIXEL_OFFSET">0</mdi>

<mdi key="LINE_OFFSET">0</mdi>

<mdi key="PIXEL_STEP">1</mdi>

<mdi key="LINE_STEP">1</mdi>

</Metadata>' >> $product_file.tmp.variable_mod.vrt

tail --lines=+2 $product_file.tmp.variable.vrt >> $product_file.tmp.variable_mod.vrt

gdalwarp -srcnodata 9.96921e+36 -dstnodata 9999 -geoloc -t_srs EPSG:4326 -overwrite $product_file.tmp.variable_mod.vrt $output_file

My problem concerns the coordinates lon and lat created with harp_import. I get an error if I try to apply the same process to reproject data:

gdal_translate -of VRT HDF5:S5P_OFFL_L2__NO2____20220731_merged.nc:longitude prova.lon.vrt

HDF5-DIAG: Error detected in HDF5 (1.10.7) thread 1:

#000: ../../../src/H5F.c line 429 in H5Fopen(): unable to open file

major: File accessibility

minor: Unable to open file

#001: ../../../src/H5Fint.c line 1803 in H5F_open(): unable to read superblock

major: File accessibility

minor: Read failed

#002: ../../../src/H5Fsuper.c line 420 in H5F__super_read(): file signature not found

major: File accessibility

minor: Not an HDF5 file

ERROR 4: HDF5:S5P_OFFL_L2__NO2____20220731_merged.nc:longitude: No such file or directory

If I try gdalinfo over the obtained files, I see the following:

**gdalinfo S5P_OFFL_L2__NO2____20220731_merged.nc**

Driver: netCDF/Network Common Data Format

Files: S5P_OFFL_L2__NO2____20220731_merged.nc

Size is 512, 512

Metadata:

NC_GLOBAL#Conventions=HARP-1.0

NC_GLOBAL#datetime_start=8227.443437696758

NC_GLOBAL#datetime_stop=8247.609589328702

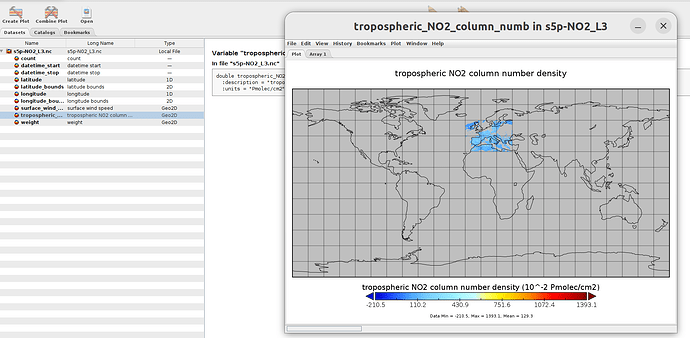

NC_GLOBAL#history=2023-06-13T11:01:10Z [harp-1.17] harp.import_product('../eodata/sentinel5p/no2/S5P_OFFL_L2__NO2____202207*.nc',operations='tropospheric_NO2_column_number_density_validity>75;keep(latitude_bounds,longitude_bounds,datetime_start,datetime_length,tropospheric_NO2_column_number_density, absorbing_aerosol_index);derive(datetime_start {time} [days since 2000-01-01]);derive(datetime_stop {time}[days since 2000-01-01]);exclude(datetime_length);bin_spatial(801,30,0.05,901,-15,0.05);derive(tropospheric_NO2_column_number_density [Pmolec/cm2]);derive(latitude {latitude});derive(longitude {longitude});latitude <= 85[degree_north];latitude >= 30[degree_north];longitude >= -15 [degree_east];longitude <= 30 [degree_east];count>0',reduce_operations='squash(time, (latitude, longitude, latitude_bounds, longitude_bounds));bin()')

Subdatasets:

SUBDATASET_1_NAME=NETCDF:"S5P_OFFL_L2__NO2____20220731_merged.nc":tropospheric_NO2_column_number_density

SUBDATASET_1_DESC=[1x800x900] tropospheric_NO2_column_number_density (64-bit floating-point)

SUBDATASET_2_NAME=NETCDF:"S5P_OFFL_L2__NO2____20220731_merged.nc":absorbing_aerosol_index

SUBDATASET_2_DESC=[1x800x900] absorbing_aerosol_index (64-bit floating-point)

SUBDATASET_3_NAME=NETCDF:"S5P_OFFL_L2__NO2____20220731_merged.nc":weight

SUBDATASET_3_DESC=[1x800x900] weight (32-bit floating-point)

SUBDATASET_4_NAME=NETCDF:"S5P_OFFL_L2__NO2____20220731_merged.nc":latitude_bounds

SUBDATASET_4_DESC=[800x2] latitude_bounds (64-bit floating-point)

SUBDATASET_5_NAME=NETCDF:"S5P_OFFL_L2__NO2____20220731_merged.nc":longitude_bounds

SUBDATASET_5_DESC=[900x2] longitude_bounds (64-bit floating-point)

Corner Coordinates:

Upper Left ( 0.0, 0.0)

Lower Left ( 0.0, 512.0)

Upper Right ( 512.0, 0.0)

Lower Right ( 512.0, 512.0)

Center ( 256.0, 256.0)

Why longitude and latitude do not appear in the SUBDASET?

I guess this is the reason why I cannot perform the extract of the longitude and latitude variables.

Is there a way to keep longitude and latitude as actual variables so that I see them as for latitude_bounds and longitude_bounds?

I tried the following operations:

operations = ";".join([

"tropospheric_NO2_column_number_density_validity>75",

"keep(latitude_bounds,longitude_bounds,datetime_start,datetime_length,tropospheric_NO2_column_number_density, absorbing_aerosol_index)",

"derive(datetime_start {time} [days since 2000-01-01])",

"derive(datetime_stop {time}[days since 2000-01-01])",

"exclude(datetime_length)",

"bin_spatial(801,30,0.05,901,-15,0.05)",

"derive(tropospheric_NO2_column_number_density [Pmolec/cm2])",

"derive(latitude {latitude})",

"derive(longitude {longitude})",

"latitude <= 85[degree_north]",

"latitude >= 30[degree_north]",

"longitude >= -15 [degree_east]",

"longitude <= 30 [degree_east]",

"count>0",

"exclude(count)",

"keep(latitude, longitude, datetime_start, datetime_stop, tropospheric_NO2_column_number_density)"

])

But I get the following error:

CLibraryError Traceback (most recent call last)

Cell In[20], line 42

40 s5p_fps = sorted(glob.glob(q))

41 print('Merging: ', date)

---> 42 no2_map=harp.import_product(files_in, operations, reduce_operations=reduce_operations)

43 # harp.export_product(no2_map, output_file, operations="squash(time, (latitude, longitude, latitude_bounds, longitude_bounds))")

44 harp.export_product(no2_map, output_file)

File ~/miniconda3/envs/SP5_harp_2/lib/python3.11/site-packages/harp/_harppy.py:1254, in import_product(filename, operations, options, reduce_operations, post_operations)

1252 try:

1253 if _lib.harp_product_append(merged_product_ptr[0], c_product_ptr[0]) != 0:

-> 1254 raise CLibraryError()

1255 finally:

1256 _lib.harp_product_delete(c_product_ptr[0])

CLibraryError: products don't both have variable 'tropospheric_NO2_column_number_density_count'

Sorry for bothering you again, but I have to understand if Harp is the right library for me.

Monica