Dear all,

I am experiencing some trouble with the HARP command line tools (v1.9.2) and VISAN (v4.0).

I want to create an animated plot of the UVAI (S5P_OFFL_L2__AER_AI) generated by the Australian fires between 29/12/2019 and 08/01/2020 (following this ESA animation of the Australian fires).

I am therefore downloading all products covering the region between eastern Australia and western South America for dates between 29/12/2019 and 08/01/2020. The first step is the following:

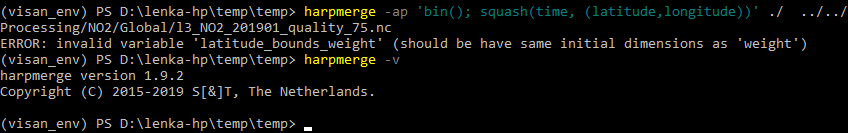

harpmerge -ap 'bin(); squash(time, (latitude,longitude))' -a 'latitude > -42 [degree_north]; latitude < -7 [degree_north]; absorbing_aerosol_index_validity > 80; bin_spatial(176,-42,0.2,1801,-180,0.2); derive(longitude {longitude}); derive(latitude {latitude})' S5P_OFFL_L2__AER_AI_20191229*.nc …/Processing_daily_map/Australia_fire_20191229.nc

I do this for each day between 29/12/2019 and 08/01/2020, so that I have a regular map between eastern Australia and western South America for each of the 11 days (all on the same lat/lon grid). Generating the daily products over the South Pacific works well. I then merge the 11 resulting maps into a single file (to create an animated plot in VISAN) with:
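For illustration, the per-day step described above can be scripted rather than typed 11 times. The sketch below only builds the `harpmerge` argument lists and does not run them; the operation strings are copied from the command above, while the output directory name `daily_maps` and the helper `daily_commands` are hypothetical names chosen for this example.

```python
from datetime import date, timedelta

# Operation strings copied from the daily harpmerge command.
OPERATIONS = (
    "latitude > -42 [degree_north]; latitude < -7 [degree_north]; "
    "absorbing_aerosol_index_validity > 80; "
    "bin_spatial(176,-42,0.2,1801,-180,0.2); "
    "derive(longitude {longitude}); derive(latitude {latitude})"
)
POST_OPERATIONS = "bin(); squash(time, (latitude,longitude))"


def daily_commands(first, last, out_dir):
    """Return one harpmerge argument list per day, first to last inclusive."""
    commands = []
    day = first
    while day <= last:
        stamp = day.strftime("%Y%m%d")
        commands.append([
            "harpmerge",
            "-ap", POST_OPERATIONS,
            "-a", OPERATIONS,
            # Glob pattern: must still be expanded by a shell or glob.glob.
            f"S5P_OFFL_L2__AER_AI_{stamp}*.nc",
            # Hypothetical output location for the daily map.
            f"{out_dir}/Australia_fire_{stamp}.nc",
        ])
        day += timedelta(days=1)
    return commands


commands = daily_commands(date(2019, 12, 29), date(2020, 1, 8), "daily_maps")
print(len(commands))     # number of daily commands
print(commands[0][-1])   # output file of the first day
```

Because every day reuses the same operation strings, any difference between the daily grids would have to come from the processing itself rather than from a typing mistake in one of the commands.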

harpmerge Australia_fire_*.nc …/Australia_20191229_20200108/Australia_fire_all.nc

But when trying to use the wplot command on the Australia_fire_all.nc product in VISAN, it gives me the following error:

Traceback (most recent call last):

File "", line 1, in

File "/home/rus/anaconda3/lib/python3.7/site-packages/visan/commands.py", line 642, in wplot

dataSetId = plot.AddGridData(latitude, longitude, data)

File "/home/rus/anaconda3/lib/python3.7/site-packages/visan/plot/worldplotframe.py", line 485, in AddGridData

raise ValueError("plot latitudeGrid should be the same for all grids")

ValueError: plot latitudeGrid should be the same for all grids

I do not understand this, because I browsed the Australia_fire_all.nc product in VISAN and every variable of interest (latitude, longitude and absorbing aerosol index) has dimensions 11 x 175 x 1800 and hence appears to have the same latitude grid.

Sometimes there are differences of about 10^-14 between some latitude coordinates (and similar differences for longitude coordinates) from one day to another. I do not know whether my issue is due to these differences, which are tiny compared to the grid resolution, or how to work around them, because I applied exactly the same command line to generate each daily product.
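To make the effect of such tiny differences concrete, here is a minimal Python sketch. It assumes, based only on the wording of the error message, that the grids are compared for exact equality; that is a guess, not something confirmed from the VISAN source. It also assumes that the derived latitudes are the cell centres of the `bin_spatial(176,-42,0.2,...)` edges. The helper names `bin_centers` and `grids_match` are hypothetical.

```python
import math


def bin_centers(n_edges, start, step):
    """Centres of the n_edges - 1 cells spanned by regularly spaced edges."""
    return [start + (i + 0.5) * step for i in range(n_edges - 1)]


def grids_match(a, b, abs_tol=1e-9):
    """Compare two coordinate axes with an absolute tolerance."""
    return len(a) == len(b) and all(
        math.isclose(x, y, rel_tol=0.0, abs_tol=abs_tol) for x, y in zip(a, b)
    )


# Nominal latitude axis implied by bin_spatial(176, -42, 0.2, ...).
nominal_lat = bin_centers(176, -42.0, 0.2)

# Simulated axis of another day with a 1e-14 offset (illustrative data only).
noisy_lat = [x + 1e-14 for x in nominal_lat]

print(len(nominal_lat))                     # number of latitude cells
print(nominal_lat == noisy_lat)             # exact comparison
print(grids_match(nominal_lat, noisy_lat))  # comparison with a tolerance
```

If an exact comparison is indeed what fails, one possible workaround would be to overwrite the latitude and longitude axes of every daily product with a single reference axis before plotting.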

I also tried to use the longitude_range operation to handle the International Date Line, but I did not manage to make it work correctly:

longitude_range(142 [degree_east], -82 [degree_east])

It seems to exclude the measurements between [142,180] and between [-180,-82]. I tried many other variants, but none gave me the expected results.
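The selection I am after can be written down as a small Python sketch. This is plain Python illustrating the intended wrap-around behaviour, not HARP's implementation, and the function name `in_longitude_range` is hypothetical.

```python
def in_longitude_range(lon, lon_min, lon_max):
    """True if lon lies on the eastward arc from lon_min to lon_max.

    All values are in degrees east; the arc may cross the +/-180 meridian.
    """
    width = (lon_max - lon_min) % 360.0      # eastward extent of the arc
    return (lon - lon_min) % 360.0 <= width  # eastward offset of lon


# Illustrative longitudes: the first five should be kept, the rest dropped.
samples = [150.0, 179.9, -179.9, -120.0, -82.0, 0.0, 100.0, -60.0]
kept = [lon for lon in samples if in_longitude_range(lon, 142.0, -82.0)]
print(kept)
```

Numerically, the same arc can also be described as running from 142 to 278 degrees east (-82 + 360). Whether longitude_range expects the upper bound in that form is an assumption that would need to be checked against the HARP operations documentation.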

For your information, I followed the same process to study the UVAI generated by a fire over California, and it worked very well, except that I did not need to merge the products day by day beforehand because the area was covered by a single product per day. Here, as the area is much larger, I need to create a merged product for each day and then merge them all. This seems to work, but VISAN then does not seem to be able to handle the result.

I am probably doing something wrong but I cannot figure out what.

Any help would be appreciated!

Thank you in advance

Simon