Dear team,

I downloaded the data

S5P_OFFL_L2__CO_____20200610T210512_20200610T224642_13783_01_010302_20200612T104836.nc

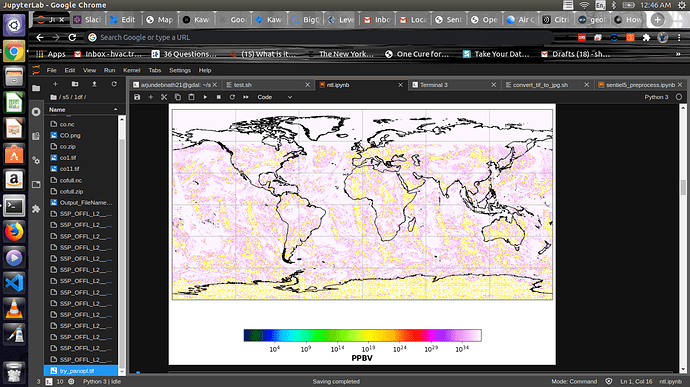

and was trying to create a GeoTIFF image from it.

As mentioned, I used HARP to convert it to an intermediate netCDF file using this command:

```shell
harpconvert -a 'CO_column_number_density_validity>50;bin_spatial(920,-5,0.025,1160,86,0.025);' S5P_OFFL_L2__CO_____20200610T210512_20200610T224642_13783_01_010302_20200612T104836.nc testing.nc
```
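For reference, HARP's `bin_spatial(lat_edge_length, lat_edge_offset, lat_edge_step, lon_edge_length, lon_edge_offset, lon_edge_step)` counts cell *edges*, so each axis has one cell fewer than its edge count. A minimal sketch, assuming that argument order, that computes the area a `bin_spatial` grid actually covers (the helper name `grid_extent` is hypothetical):

```python
def grid_extent(lat_edge_length, lat_edge_offset, lat_edge_step,
                lon_edge_length, lon_edge_offset, lon_edge_step):
    """Return (lat_min, lat_max, lon_min, lon_max) covered by a bin_spatial grid.

    Assumes HARP's bin_spatial argument order; *_edge_length counts edges,
    so each axis spans (edge_length - 1) * edge_step degrees.
    """
    lat_max = lat_edge_offset + (lat_edge_length - 1) * lat_edge_step
    lon_max = lon_edge_offset + (lon_edge_length - 1) * lon_edge_step
    return lat_edge_offset, lat_max, lon_edge_offset, lon_max


if __name__ == "__main__":
    # Grid of the first command: bin_spatial(920,-5,0.025,1160,86,0.025)
    print(grid_extent(920, -5, 0.025, 1160, 86, 0.025))
    # Grid of the second command: bin_spatial(2001,-90,0.01,2001,-180,0.01)
    print(grid_extent(2001, -90, 0.01, 2001, -180, 0.01))
```

Under that assumption the first grid spans roughly latitude -5 to 18 and longitude 86 to 115, while the second spans only latitude -90 to -70 and longitude -180 to -160, so it may be worth checking that the orbit has valid pixels inside each box.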

as well as this:

```shell
harpconvert -a 'CO_column_number_density_validity>50;keep(datetime_start,datetime_length,CO_column_number_density,latitude_bounds,longitude_bounds);bin_spatial(2001,-90,0.01,2001,-180,0.01);derive(CO_column_number_density)",derive(CO_column_number_density)",post_operations="bin();squash(time, (latitude_bounds,longitude_bounds));derive(latitude {latitude});derive(longitude {longitude})' S5P_OFFL_L2__CO_____20200610T210512_20200610T224642_13783_01_010302_20200612T104836.nc ll3.nc
```
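The second command embeds `post_operations=`, which looks like the keyword argument of HARP's Python `harp.import_product` rather than part of a `harpconvert -a` operations string. A minimal sketch, assuming the Python bindings' `import_product(filename, operations=..., post_operations=...)` and `export_product(product, filename)`, that keeps the two operation lists separate; the helper names `build_operations` and `convert` are hypothetical. On the command line, `harpmerge` with its `-ap` option appears to be the counterpart for post-operations (worth confirming with `harpmerge --help` for your HARP version).

```python
def build_operations(lat_edges, lon_edges):
    """Build HARP operation strings; *_edges = (edge_length, edge_offset, edge_step)."""
    lat_n, lat_off, lat_step = lat_edges
    lon_n, lon_off, lon_step = lon_edges
    # Applied per product while importing: filter, keep variables, then grid.
    operations = ";".join([
        "CO_column_number_density_validity>50",
        "keep(datetime_start,datetime_length,CO_column_number_density,"
        "latitude_bounds,longitude_bounds)",
        f"bin_spatial({lat_n},{lat_off},{lat_step},{lon_n},{lon_off},{lon_step})",
    ])
    # Applied afterwards: collapse time, drop it from the bounds, add cell centres.
    post_operations = ";".join([
        "bin()",
        "squash(time,(latitude_bounds,longitude_bounds))",
        "derive(latitude {latitude})",
        "derive(longitude {longitude})",
    ])
    return operations, post_operations


def convert(infile, outfile, lat_edges, lon_edges):
    import harp  # HARP Python bindings, imported only when actually converting

    operations, post_operations = build_operations(lat_edges, lon_edges)
    product = harp.import_product(infile, operations=operations,
                                  post_operations=post_operations)
    harp.export_product(product, outfile)
```

For example, `convert(l2_file, "ll3.nc", (2001, -90, 0.01), (2001, -180, 0.01))` would reproduce the grid of the second command.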

It does convert to the new netCDF files. However, when I open them with GDAL and read the array, it contains only a single unique value:

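```python
import numpy as np
from osgeo import gdal  # GDAL's Python bindings live in the osgeo package

sc = gdal.Open('/home/arjundebnath21/s5/ll3.nc', gdal.GA_ReadOnly)
# Open the first subdataset (netCDF variable) that GDAL lists
band_ds = gdal.Open(sc.GetSubDatasets()[0][0], gdal.GA_ReadOnly)
band_array = band_ds.ReadAsArray()
print(np.unique(band_array))
```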
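`GetSubDatasets()[0]` is simply the first variable GDAL lists, which need not be `CO_column_number_density` (the file also holds bounds and time variables). A minimal sketch that selects the subdataset by variable name instead, assuming GDAL's netCDF subdataset names end in `:<variable>` (e.g. `NETCDF:"ll3.nc":CO_column_number_density`); `find_subdataset` and `read_variable` are hypothetical helpers.

```python
def find_subdataset(subdatasets, variable):
    """Return the subdataset name whose trailing variable part equals `variable`.

    `subdatasets` is the list of (name, description) tuples from
    gdal.Dataset.GetSubDatasets().
    """
    for name, _description in subdatasets:
        if name.rsplit(":", 1)[-1] == variable:
            return name
    raise KeyError(f"no subdataset named {variable!r}")


def read_variable(path, variable):
    from osgeo import gdal  # imported lazily so the helper above has no GDAL dependency

    container = gdal.Open(path, gdal.GA_ReadOnly)
    name = find_subdataset(container.GetSubDatasets(), variable)
    return gdal.Open(name, gdal.GA_ReadOnly).ReadAsArray()
```

For example, `read_variable('/home/arjundebnath21/s5/ll3.nc', 'CO_column_number_density')`.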

The result is `array([9.96920997e+36])`, which I believe is the NoData (fill) value.
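9.96920997e+36 matches the netCDF library's default fill value for floats (`NC_FILL_FLOAT`, 9.9692099683868690e+36), i.e. cells that never received data. A minimal sketch that masks it (and NaN) so an all-fill array is easy to tell apart from real values; the tolerance-based comparison is an assumption to absorb float32/float64 rounding, and `mask_fill` is a hypothetical helper.

```python
import numpy as np

NC_FILL_FLOAT = 9.9692099683868690e+36  # netCDF default fill value for float


def mask_fill(array, fill_value=NC_FILL_FLOAT):
    """Return a masked array in which fill values and NaNs are masked."""
    array = np.asarray(array, dtype=np.float64)
    # Relative tolerance, because the stored value may be the float32 rounding.
    invalid = np.isclose(array, fill_value, rtol=1e-6) | np.isnan(array)
    return np.ma.masked_array(array, mask=invalid)
```

`mask_fill(band_array).count()` then gives the number of valid cells; 0 means every cell is fill.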

Can you please help me understand why this is happening?
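One way to narrow this down is to count how many Level 2 pixels that pass the quality filter fall inside the `bin_spatial` box at all. A minimal sketch, assuming the S5P file stores `latitude`, `longitude` and `qa_value` in its `PRODUCT` group and that HARP's `CO_column_number_density_validity>50` corresponds to `qa_value > 0.5`; both mappings are assumptions, and the helper names are hypothetical.

```python
import numpy as np


def count_pixels_in_box(lat, lon, lat_min, lat_max, lon_min, lon_max, valid=None):
    """Count pixels whose centre lies inside the box (and, optionally, are valid)."""
    # Masked (missing) coordinates become NaN, which never falls inside the box.
    lat = np.ma.filled(np.ma.asarray(lat, dtype=float), np.nan)
    lon = np.ma.filled(np.ma.asarray(lon, dtype=float), np.nan)
    inside = (lat >= lat_min) & (lat <= lat_max) & (lon >= lon_min) & (lon <= lon_max)
    if valid is not None:
        inside &= np.asarray(valid, dtype=bool)
    return int(np.count_nonzero(inside))


def count_from_l2(path, box, qa_threshold=0.5):
    """`box` = (lat_min, lat_max, lon_min, lon_max), e.g. (-90, -70, -180, -160)."""
    from netCDF4 import Dataset  # imported lazily; needs the netCDF4 package

    with Dataset(path) as nc:
        product = nc["PRODUCT"]
        lat = product["latitude"][:]
        lon = product["longitude"][:]
        qa = product["qa_value"][:]
    valid = np.ma.filled(qa > qa_threshold, False)  # missing qa counts as invalid
    return count_pixels_in_box(lat, lon, *box, valid=valid)
```

A result of 0 for a given box would mean the gridding step has no input pixels there.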

Also, after converting to an HDF5 (.h5) file and then to a TIFF file, I get separate bands for the latitude and longitude bounds, whose shapes differ from that of the CO column density. Could you also please guide me on how to create a single GeoTIFF file from this?
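On a regular grid such as the one `bin_spatial` produces, the latitude and longitude bounds need not become separate bands: the grid geometry can be encoded once as a GDAL geotransform, leaving CO as the only band. A minimal sketch, assuming the HARP output stores `CO_column_number_density` as (time, latitude, longitude) with time squashed to length 1 and latitude increasing from `lat_edge_offset`; the function names are hypothetical, and the writer uses GDAL's standard `GTiff` driver calls.

```python
import numpy as np


def geotransform(lat_edge_offset, lat_edge_step, n_lat, lon_edge_offset, lon_edge_step):
    """GDAL geotransform for a north-up raster of a regular lat/lon grid."""
    lat_top = lat_edge_offset + n_lat * lat_edge_step  # northern edge of the grid
    return (lon_edge_offset, lon_edge_step, 0.0, lat_top, 0.0, -lat_edge_step)


def to_north_up(grid):
    """Drop a length-1 time axis and flip rows so the first row is the northmost."""
    grid = np.asarray(grid)
    if grid.ndim == 3 and grid.shape[0] == 1:
        grid = grid[0]
    return grid[::-1, :]


def write_geotiff(path, grid, lat_edge_offset, lat_edge_step,
                  lon_edge_offset, lon_edge_step, nodata=np.nan):
    from osgeo import gdal, osr  # imported lazily; needs GDAL's Python bindings

    data = to_north_up(grid).astype(np.float32)
    n_lat, n_lon = data.shape  # number of cells = edge_length - 1
    ds = gdal.GetDriverByName("GTiff").Create(path, n_lon, n_lat, 1, gdal.GDT_Float32)
    ds.SetGeoTransform(geotransform(lat_edge_offset, lat_edge_step, n_lat,
                                    lon_edge_offset, lon_edge_step))
    srs = osr.SpatialReference()
    srs.ImportFromEPSG(4326)  # plain WGS84 latitude/longitude
    ds.SetProjection(srs.ExportToWkt())
    band = ds.GetRasterBand(1)
    band.WriteArray(data)
    band.SetNoDataValue(float(nodata))
    ds.FlushCache()
    ds = None  # dereferencing closes the file and finishes writing
```

For the first command's grid this would be called as `write_geotiff("co.tif", co_array, -5, 0.025, 86, 0.025)`, where `co_array` is the CO variable read from the HARP output.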

Your help would be much appreciated.

Thanks and regards,

Arjun